The world of academic research is facing a critical challenge in the era of artificial intelligence, and a recent case from the University of Hong Kong (HKU) perfectly illustrates the potential pitfalls of AI misuse in scholarly work.

A research paper by HKU PhD student Bai Yiming has become the center of an academic integrity investigation after suspicions arose that some of its references were fabricated. Published in October in Springer Nature’s journal “China Population and Development Studies”, the paper, titled “Forty Years of Fertility Transition in Hong Kong”, reportedly contains approximately 24 questionable references that may have been generated by AI.

The response from academic stakeholders has been swift and systematic. Springer Nature took immediate action by adding an editor’s note to the online version of the paper on November 14, signaling serious concerns about the accuracy of its references. The publisher has committed to a thorough investigation following the guidelines of the Committee on Publication Ethics (COPE), demonstrating the academic community’s commitment to maintaining research integrity.

The paper’s co-authorship adds another layer of complexity to the situation. Paul Yip, an Associate Dean of HKU’s Faculty of Social Sciences and the corresponding author, has publicly acknowledged the concerns surrounding the publication. This acknowledgment underscores the seriousness with which academic institutions are treating potential AI-related misconduct.

HKU has been equally proactive, emphasizing its strict policies regarding artificial intelligence in research. The university has initiated standard investigative procedures to examine the allegations of academic misconduct, promising appropriate actions if any wrongdoing is confirmed.

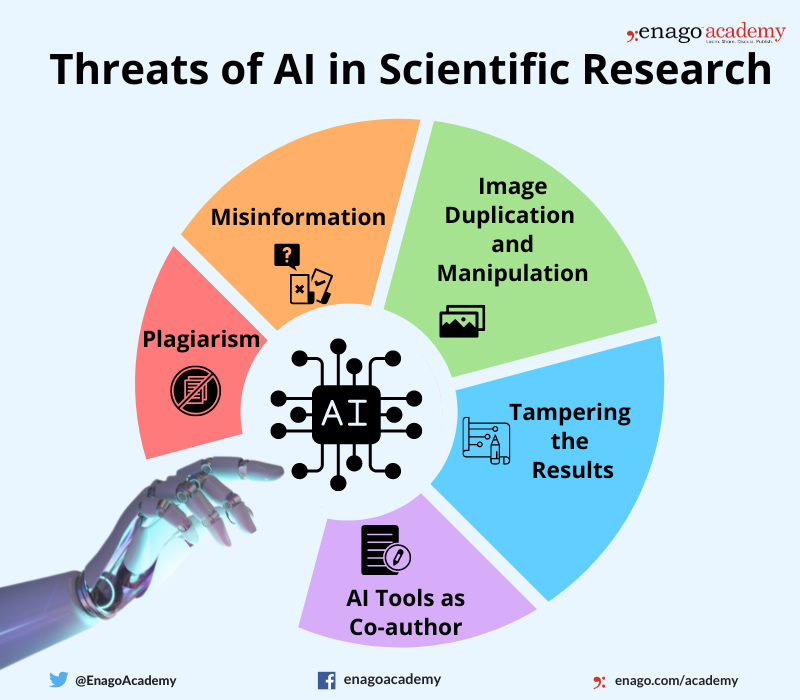

Beyond this specific incident, the case highlights broader concerns emerging in the academic landscape. As AI technology becomes increasingly accessible, researchers and institutions are grappling with the potential for fabricated data, references, and content. The technology that promises to enhance research efficiency also presents significant ethical challenges.

This situation serves as a critical reminder of the need for robust guidelines and oversight in academic research. While AI tools can be incredibly powerful research assistants, they must be used responsibly and transparently. The potential for misuse threatens the fundamental principles of scholarly work: accuracy, originality, and intellectual integrity.

The investigation into Bai Yiming’s paper is more than an isolated incident. It could set precedents for how academic institutions and publishers address AI-related research misconduct, and its outcome will likely influence future policies and practices on the use of AI in scholarly work.

As AI continues to evolve and integrate into research processes, the academic community must remain vigilant. Developing comprehensive frameworks that leverage AI’s potential while mitigating its risks will be crucial. This requires ongoing dialogue, clear ethical guidelines, and a commitment to maintaining the highest standards of academic scholarship.

The HKU case is a stark reminder that technological advancement must always be balanced with rigorous ethical considerations. It challenges researchers, publishers, and institutions to be proactive, transparent, and accountable in their approach to emerging technologies.